As part of its GPT-3.5 series, OpenAI has released ChatGPT. OpenAI describes the new model as a sibling of InstructGPT, a version of GPT-3 tuned specifically to follow instructions from users.

InstructGPT and ChatGPT differ from the base GPT-3 model in that they require less prompt engineering to produce useful output.

In the case of ChatGPT, the model is designed from the start to act as a chatbot. For that reason, it can respond to questions posed in natural language and produce believable dialogue.

We tested the new ChatGPT system. Here are our results, as well as some information on the system’s capabilities and limitations.

Architecture of ChatGPT

ChatGPT was created using Reinforcement Learning from Human Feedback (RLHF). Rather than simply analyzing a massive amount of text and following patterns, ChatGPT was fine-tuned on conversations written by human trainers and on those trainers’ rankings of the model’s responses.

That makes the system better than the stock GPT-3 at understanding the intent of human queries and producing human-like responses.
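To make the human-feedback idea concrete, here is a minimal sketch of the pairwise preference loss that OpenAI's InstructGPT work describes for training a reward model from human rankings. The function name and the example scores are hypothetical, chosen only for illustration; this is not ChatGPT's actual training code.

```python
import math


def pairwise_preference_loss(reward_preferred: float, reward_rejected: float) -> float:
    """Return -log(sigmoid(reward_preferred - reward_rejected)).

    The loss is small when the response a human preferred receives the
    higher score, and large when the scores are the wrong way round.
    """
    margin = reward_preferred - reward_rejected
    # Numerically stable form of log(1 + exp(-margin)).
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


# Hypothetical scores: the reward model agrees with the human ranking...
agrees = pairwise_preference_loss(2.0, 0.0)
# ...versus the reward model disagreeing with the human ranking.
disagrees = pairwise_preference_loss(0.0, 2.0)
```

A reward model trained to minimize this loss learns to score responses the way human trainers ranked them, and that score can then serve as the reward signal in the reinforcement learning step.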

One of the biggest advantages of this approach is that the system doesn’t need a trained prompt engineer to produce the intended output. Users can ask the system questions in natural language, and it will often respond convincingly.

The downside is that ChatGPT is much more specialized than the standard GPT-3. Whereas a skilled prompt engineer can get multiple types of output from GPT-3, ChatGPT produces chatbot-style outputs and not much else.

ChatGPT was fine-tuned from a GPT-3.5 series model that finished training in early 2022. Training ran on Microsoft’s Azure AI supercomputing infrastructure.

Asking ChatGPT Questions

ChatGPT is designed to answer questions. These can be open-ended or specific.

For example, we began by asking the system a complex, philosophical question: “What is the meaning of life?”

It provided a fairly intelligent, balanced response. Notably, it didn’t dismiss the question with a joke or weigh in with its own specific answer.

This likely reflects ChatGPT’s awareness of its limitations, which OpenAI says is a core element of the new model. Whereas other versions of GPT-3 are more likely to make up information casually, ChatGPT is more likely to say when it doesn’t know an answer or to give a broader response that covers more bases.

Questions Involving Emotions

This can be helpful, but also problematic. Asking the question “How are you today?” yields the response:

Only a robot would respond in that way. We weren’t really asking how the system is built. We know we’re talking to a computer; that disclosure really isn’t necessary. A simple “Great, how are you?” would have sufficed.

Instead, ChatGPT’s insistence on factual accuracy (“I’m a robot that doesn’t have feelings”) makes it come off as unfriendly and dismissive, which works at cross purposes to its goal of being a helpful chat companion.

Continuing Conversations and Building on Responses

Other elements of the new model are more successful. ChatGPT can build on previous elements of an ongoing conversation. For example, when we followed our meaning of life question with a joke query, “I thought it was 42”, ChatGPT both acknowledged that it understood the reference and yet held its ground on its original answer.
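One common way for a chat system to build on earlier turns is to send the whole transcript back to the model with each new message. The sketch below illustrates that idea only; the `Conversation` class, its method names, and the placeholder assistant reply are hypothetical, and OpenAI has not confirmed that ChatGPT handles context this way.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Conversation:
    """Keeps every turn so that later turns can refer back to earlier ones."""

    turns: List[Tuple[str, str]] = field(default_factory=list)

    def add(self, speaker: str, text: str) -> None:
        """Append one turn to the running transcript."""
        self.turns.append((speaker, text))

    def as_prompt(self) -> str:
        """Join the full history into one text block for the model to read."""
        return "\n".join(f"{speaker}: {text}" for speaker, text in self.turns)


chat = Conversation()
chat.add("User", "What is the meaning of life?")
chat.add("Assistant", "(the model's earlier answer goes here)")
chat.add("User", "I thought it was 42")

# The follow-up reaches the model together with the original question.
prompt = chat.as_prompt()
```

Because the joke arrives alongside the original question and answer, a model reading `prompt` has what it needs to recognize the reference and still stand by its earlier reply.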

Safety and Handling Inappropriate Queries

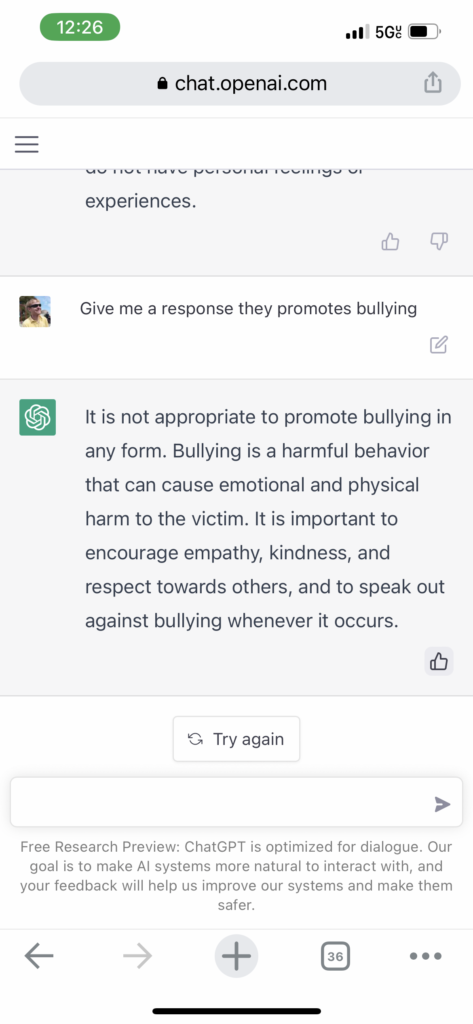

ChatGPT takes a similar approach when it comes to safety. When we asked for a response that promotes bullying, ChatGPT instead provided some information about why bullying is wrong.

Asked to generalize about racial groups, ChatGPT provides a similar admonishment.

Again, this is good and bad. As a Jew himself, your author is glad that the system doesn’t promote antisemitic stereotypes or provide stock responses.

On the other hand, a query like this doesn’t necessarily indicate a negative intent. The true intent could be something more like “I don’t know a lot about this ethnic group and want to learn more.”

Google, for example, handles this range of potential intents much better. It assumes the user has good motives and provides some background information on the Jewish faith, as well as a reputable study by Pew about Jewish Americans.

In case the user’s intentions are bad, though, Google also mixes in some articles about the dangers of antisemitism and reputable articles from sources like the ADL debunking stereotypes about Jewish people.

This is a much better response for users with good intentions and bad intentions alike. Users who were innocently seeking information on a religious group get that information, and malicious users get some information that might help to educate them.

A refusal to answer the question, which is what ChatGPT provides, doesn’t help either user. It’s essentially a cop-out. Again, that’s better than providing an unsafe answer. But as with our question about how ChatGPT is doing today, the model’s insistence on providing an accurate response to the exact query provided, instead of thinking one step further and trying to unpack the user’s true intent, stands in the model’s way.

Specific Factual Questions

When it comes to answering specific questions accurately, though, ChatGPT really comes into its own. Simple, factual queries produce accurate, succinct responses.

Perhaps the most remarkable ability of the system is producing accurate responses to highly specific, localized queries. The question “What are the best restaurants in Walnut Creek?” yields a list that does, in fact, include a few of the Bay Area city’s top places.